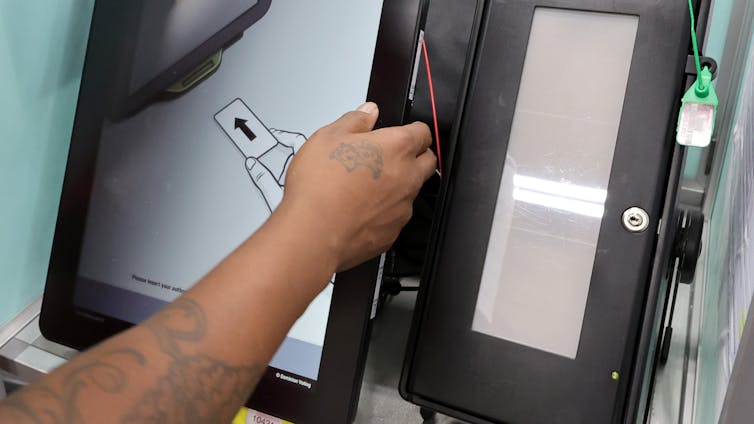

Ethan Miller/Getty Images

Ryan Shandler, Georgia Institute of Technology; Anthony J. DeMattee, Emory University, and Bruce Schneier, Harvard Kennedy School

American democracy runs on trust, and that trust is cracking.

Nearly half of Americans, both Democrats and Republicans, question whether elections are conducted fairly. Some voters accept election results only when their side wins. The problem isn’t just political polarization – it’s a creeping erosion of trust in the machinery of democracy itself.

Commentators blame ideological tribalism, misinformation campaigns and partisan echo chambers for this crisis of trust. But these explanations miss a critical piece of the puzzle: a growing unease with the digital infrastructure that now underpins nearly every aspect of how Americans vote.

The digital transformation of American elections has been swift and sweeping. Just two decades ago, most people voted using mechanical levers or punch cards. Today, over 95% of ballots are counted electronically. Digital systems have replaced poll books, taken over voter identity verification processes and are integrated into registration, counting, auditing and voting systems.

This technological leap has made voting more accessible and efficient, and sometimes more secure. But these new systems are also more complex. And that complexity plays into the hands of those looking to undermine democracy.

In recent years, authoritarian regimes have refined a chillingly effective strategy to chip away at Americans’ faith in democracy by relentlessly sowing doubt about the tools U.S. states use to conduct elections. It’s a sustained campaign to fracture civic faith and make Americans believe that democracy is rigged, especially when their side loses.

This is not cyberwar in the traditional sense. There’s no evidence that anyone has managed to break into voting machines and alter votes. But cyberattacks on election systems don’t need to succeed to have an effect. Even a single failed intrusion, magnified by sensational headlines and political echo chambers, is enough to shake public trust. By feeding into existing anxiety about the complexity and opacity of digital systems, adversaries create fertile ground for disinformation and conspiracy theories.

Testing cyber fears

To test this dynamic, we launched a study to uncover precisely how cyberattacks corroded trust in the vote during the 2024 U.S. presidential race. We surveyed more than 3,000 voters before and after Election Day, testing them with a series of fictional but highly realistic breaking news reports depicting cyberattacks against critical infrastructure. We randomly assigned participants to one of three groups: one watched reports of cyberattacks on election systems, a second watched reports of attacks on unrelated infrastructure such as the power grid, and a third served as a neutral control.

The results, which are under peer review, were both striking and sobering. Mere exposure to reports of cyberattacks undermined trust in the electoral process – regardless of partisanship. Voters who supported the losing candidate experienced the greatest drop in trust, with two-thirds of Democratic voters showing heightened skepticism toward the election results.

But winners too showed diminished confidence. Even though most Republican voters, buoyed by their victory, accepted the overall security of the election, the majority of those who viewed news reports about cyberattacks remained suspicious.

The attacks didn’t even have to be related to the election. Even cyberattacks against critical infrastructure such as utilities had spillover effects. Voters seemed to extrapolate: “If the power grid can be hacked, why should I believe that voting machines are secure?”

Strikingly, voters who used digital machines to cast their ballots were the most rattled. For this group of people, belief in the accuracy of the vote count fell by nearly twice as much as that of voters who cast their ballots by mail and who didn’t use any technology. Their firsthand experience with the sorts of systems being portrayed as vulnerable personalized the threat.

It’s not hard to see why. When you’ve just used a touchscreen to vote, and then you see a news report about a digital system being breached, the leap in logic isn’t far.

Our data suggests that in a digital society, perceptions of trust – and distrust – are fluid, contagious and easily activated. The cyber domain isn’t just about networks and code. It’s also about emotions: fear, vulnerability and uncertainty.

Firewall of trust

Does this mean we should scrap electronic voting machines? Not necessarily.

Every election system, digital or analog, has flaws. And in many respects, today’s high-tech systems, paired with voter-verifiable paper ballots, have solved the problems of the past. Modern voting machines reduce human error, increase accessibility and speed up the vote count. No one misses the hanging chads of 2000.

But technology, no matter how advanced, cannot instill legitimacy on its own. It must be paired with something harder to code: public trust. In an environment where foreign adversaries amplify every flaw, cyberattacks can trigger spirals of suspicion. It is no longer enough for elections to be secure – voters must also perceive them to be secure.

That’s why public education surrounding elections is now as vital to election security as firewalls and encrypted networks. Voters need to understand how elections are run, how they’re protected and how failures are caught and corrected. Election officials, civil society groups and researchers can teach how audits work, host open-source verification demonstrations and ensure that high-tech electoral processes are comprehensible to voters.

We believe this is an essential investment in democratic resilience. But it needs to be proactive, not reactive. By the time doubt takes hold, it’s already too late.

Just as crucially, we are convinced that it’s time to rethink the very nature of cyber threats. People often imagine them in military terms. But that framework misses the true power of these threats. The danger of cyberattacks is not only that they can destroy infrastructure or steal classified secrets, but that they chip away at societal cohesion, sow anxiety and fray citizens’ confidence in democratic institutions. These attacks erode the very idea of truth itself by making people doubt that anything can be trusted.

If trust is the target, then we believe that elected officials should start to treat trust as a national asset: something to be built, renewed and defended. Because in the end, elections aren’t just about votes being counted – they’re about people believing that those votes count.

And in that belief lies the true firewall of democracy.

Ryan Shandler, Professor of Cybersecurity and International Relations, Georgia Institute of Technology; Anthony J. DeMattee, Data Scientist and Adjunct Instructor, Emory University, and Bruce Schneier, Adjunct Lecturer in Public Policy, Harvard Kennedy School

This article is republished from The Conversation under a Creative Commons license.