Anjana Susarla, Michigan State University

U.S. state legislatures are where the action is for placing guardrails around artificial intelligence technologies, given the lack of meaningful federal regulation. The resounding defeat in Congress of a proposed moratorium on state-level AI regulation means states are free to continue filling the gap.

Several states have already enacted legislation around the use of AI. All 50 states have introduced various AI-related legislation in 2025.

Four aspects of AI in particular stand out from a regulatory perspective: government use of AI, AI in health care, facial recognition and generative AI.

Government use of AI

The oversight and responsible use of AI are especially critical in the public sector. Predictive AI – AI that performs statistical analysis to make forecasts – has transformed many governmental functions, from determining social services eligibility to making recommendations on criminal justice sentencing and parole.

But the widespread use of algorithmic decision-making could have major hidden costs. Potential algorithmic harms posed by AI systems used for government services include racial and gender biases.

Recognizing the potential for algorithmic harms, state legislatures have introduced bills focused on public sector use of AI, with emphasis on transparency, consumer protections and recognizing risks of AI deployment.

Several states have required AI developers to disclose risks posed by their systems. The Colorado Artificial Intelligence Act includes transparency and disclosure requirements for developers of AI systems involved in making consequential decisions, as well as for those who deploy them.

Montana’s new “Right to Compute” law requires AI developers to adopt risk management frameworks – methods for addressing security and privacy in the development process – for AI systems involved in critical infrastructure. Some states have established bodies that provide oversight and regulatory authority, such as those specified in New York’s SB 8755.

AI in health care

In the first half of 2025, 34 states introduced over 250 AI-related health bills. The bills generally fall into four categories: disclosure requirements, consumer protection, insurers’ use of AI and clinicians’ use of AI.

Disclosure bills define what information AI system developers and the organizations that deploy the systems must disclose.

Consumer protection bills aim to keep AI systems from unfairly discriminating against some people, and ensure that users of the systems have a way to contest decisions made using the technology.

Bills covering insurers provide oversight of the payers’ use of AI to make decisions about health care approvals and payments. And bills about clinical uses of AI regulate use of the technology in diagnosing and treating patients.

Facial recognition and surveillance

In the U.S., a long-standing legal doctrine that applies to privacy issues, including facial surveillance, protects individual autonomy against interference from the government. In this context, facial recognition technologies pose significant privacy challenges as well as risks from potential biases.

Facial recognition software, commonly used in predictive policing and national security, has exhibited biases against people of color and consequently is often considered a threat to civil liberties. A pathbreaking study by computer scientists Joy Buolamwini and Timnit Gebru found that facial recognition software poses significant challenges for Black people and other historically disadvantaged minorities. Facial recognition software was less likely to correctly identify darker faces.

Bias also creeps into the data used to train these algorithms, for example when the teams that guide the development of such facial recognition software lack diversity.

By the end of 2024, 15 states in the U.S. had enacted laws to limit the potential harms from facial recognition. These state-level regulations include requirements that vendors publish bias test reports and data management practices, as well as requirements for human review in the use of these technologies.
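To give a rough sense of what a bias test report might measure, here is a minimal sketch that compares identification accuracy across demographic groups. It is only an illustration: the function names, the group labels and the sample records are hypothetical and made up for this example, and no state law prescribes this particular calculation.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: share of correct identifications} from (group, correct) pairs."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for group, correct in records:
        totals[group] += 1
        if correct:
            hits[group] += 1
    return {group: hits[group] / totals[group] for group in totals}


def accuracy_gap(by_group):
    """Difference between the highest and lowest per-group accuracy."""
    return max(by_group.values()) - min(by_group.values())


# Hypothetical, made-up records: (group label, whether the match was correct).
sample = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", True),
]

rates = accuracy_by_group(sample)
gap = accuracy_gap(rates)
print(rates, gap)
```

A large gap between groups is the kind of disparity such a report would surface; a real audit would use far larger, carefully labeled test sets and more than one error metric.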

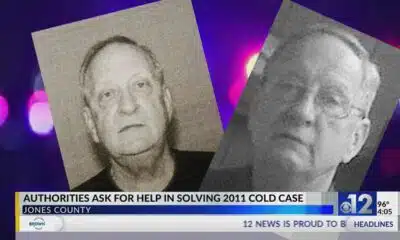

AP Photo/Carlos Osorio

Generative AI and foundation models

The widespread use of generative AI has also prompted concerns from lawmakers in many states. Utah’s Artificial Intelligence Policy Act requires individuals and organizations that use generative AI systems to interact with someone to clearly disclose that fact if the person asks whether AI is being used, though the legislature subsequently narrowed the scope to interactions that could involve dispensing advice or collecting sensitive information.

Last year, California passed AB 2013, a generative AI law that requires developers to post information on their websites about the data used to train their AI systems, including foundation models. A foundation model is any AI model that is trained on extremely large datasets and that can be adapted to a wide range of tasks without additional training.

AI developers have typically not been forthcoming about the training data they use. Such legislation could help copyright owners of content used in training AI overcome the lack of transparency.

Trying to fill the gap

In the absence of a comprehensive federal legislative framework, states have tried to address the gap by moving forward with their own legislative efforts. While such a patchwork of laws may complicate AI developers’ compliance efforts, I believe that states can provide important and needed oversight on privacy, civil rights and consumer protections.

Meanwhile, the Trump administration announced its AI Action Plan on July 23, 2025. The plan says, “The Federal government should not allow AI-related Federal funding to be directed toward states with burdensome AI regulations … ”

The move could hinder state efforts to regulate AI if states have to weigh regulations that might run afoul of the administration’s definition of burdensome against needed federal funding for AI.

Anjana Susarla, Professor of Information Systems, Michigan State University

This article is republished from The Conversation under a Creative Commons license. Read the original article.