News from the South - Kentucky News Feed

Louisville mom goes viral sharing baby’s rare diagnosis on TikTok

SUMMARY: A Louisville mother is raising awareness about her son Vincent’s rare genetic disorder, Bosma Arhinia Microphthalmia Syndrome (BAMS), which causes congenital absence of the nose and eye problems. Fewer than 100 cases are known worldwide. After a month in the NICU, Vincent faced multiple surgeries, including tracheostomy and G-tube placement. His mother, Madeline, began sharing their journey on TikTok in May 2025, gaining nearly 2 million views and connecting with other families facing medical complexities. She hopes Vincent will grow, meet milestones, and live a normal life. He recently celebrated his first birthday.


What could a street art program look like in Lexington?

SUMMARY: On August 12th, Principal Planner Hannah Crepps will present plans for a new street art program in Lexington at the Environmental Quality and Public Works Committee meeting. The program aims to formalize street art projects, which many U.S. cities use to calm traffic and reduce crashes by incorporating painted crosswalks and road designs. Lexington will draft guidelines including funding sources, community decision-making, and traffic safety compliance. A pilot chalk art project was tested in May 2025 on Shropshire Avenue. Residents can engage with the program by contacting Crepps and watching the live meeting online or in person.

The post What could a street art program look like in Lexington? appeared first on lexingtonky.news

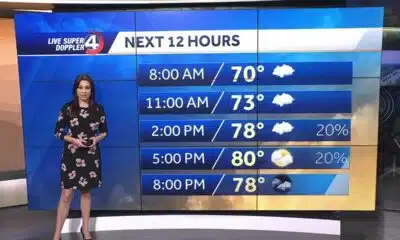

Evening Forecast 8/9/2025

SUMMARY: The evening forecast for central Kentucky on August 9, 2025, shows mostly clear skies with isolated downpours having fizzled out. Temperatures remain warm, in the mid-70s this evening, dipping to mid-upper 60s overnight with patchy fog possible in valleys. Sunday will bring steamy sunshine, a mix of sun and clouds, highs near 90°F, and isolated showers. Early next week remains typical August weather with spotty downpours Monday, a few storms Tuesday, and increased scattered rain chances Wednesday and Thursday, coinciding with many schools reopening. Overall, dry and calm conditions prevail tonight and Sunday, with rain chances rising midweek.

EU’s new AI code of practice could set regulatory standard for American companies

by Paige Gross, Kentucky Lantern

August 9, 2025

American companies are split between support and criticism of a new voluntary European AI code of practice, meant to help tech companies align themselves with upcoming regulations from the European Union’s landmark AI Act.

The voluntary code, called the General Purpose AI Code of Practice, which rolled out in July, is meant to help companies jump-start their compliance. Even non-European companies will be required to meet certain standards of transparency, safety, security and copyright compliance to operate in Europe come August 2027.

Many tech giants have already signed the code of practice, including Amazon, Anthropic, OpenAI, Google, IBM, Microsoft, Mistral AI, Cohere and Fastweb. But others have refused.

In July, Meta’s Chief Global Affairs Officer Joel Kaplan said in a statement on LinkedIn that the company would not commit.

“Europe is heading down the wrong path on AI. We have carefully reviewed the European Commission’s Code of Practice for general-purpose AI (GPAI) models and Meta won’t be signing it,” he wrote. “This Code introduces a number of legal uncertainties for model developers, as well as measures which go far beyond the scope of the AI Act.”

Though Google’s President of Global Affairs Kent Walker was critical of the code of practice in a company statement, Google has signed it, he said.

“We remain concerned that the AI Act and Code risk slowing Europe’s development and deployment of AI,” Walker wrote. “In particular, departures from EU copyright law, steps that slow approvals, or requirements that expose trade secrets could chill European model development and deployment, harming Europe’s competitiveness.”

The divergent approach of U.S. and European regulators has showcased a clear difference in attitude about AI protections and development between the two markets, said Vivien Peaden, a tech and privacy attorney with Baker Donelson.

She compared the approaches to cars — Americans are known for fast, powerful vehicles, while European cars are stylish and eco-friendly.

“Some people will say, I’m really worried that this engine is too powerful. You could drive the car off a cliff, and there’s not much you can do but to press the brake and stop it, so I like the European way,” Peaden said. “My response is, ‘Europeans make their car their way, right? You can actually tell the difference. Why? Because it was designed with a different mindset.’”

While the United States federal government has recently enacted some AI legislation through the Take It Down Act, which prohibits AI-generated nonconsensual depictions of individuals, it has not passed any comprehensive laws on how AI may operate. The Trump administration’s recent AI Action Plan paves a clear way for AI companies to continue to grow rapidly and unregulated.

But under the EU’s AI Act, tech giants like Amazon, Google and Meta will need to be more transparent about how their models are trained and operated, and follow rules for managing systemic risks if they’d like to operate in Europe.

“Currently, it’s still voluntary,” Peaden said. “But I do believe it’s going to be one of the most influential standards in AI’s industry.”

General Purpose AI Code of Practice

The EU AI Act was passed last year to mitigate risk created by AI models, and the law creates “strict obligations” for models that are considered “high risk.” High-risk AI models are those that can pose serious risks to health, safety or fundamental rights when used for employment, education, biometric identification and law enforcement, the act said.

Some AI practices, including AI-based manipulation and deception, predictions of criminal offenses, social scoring, emotion recognition in workplaces and educational institutions and real-time biometric identification for law enforcement, are considered “unacceptable risk” and are banned from use in the EU altogether.

Some of these practices, like social scoring — using an algorithm to determine access to certain privileges or opportunities like mortgages or jail time — are widely used, and often unregulated in the United States.

While AI models that will be released after Aug. 2 already have to comply with the EU AI Act’s standards, large language models (LLMs) — the technical foundation of AI models — released before that date have through August 2027 to fully comply. The code of practice released last month offers a voluntary way for companies to get into compliance early, and with more leniency than when the 2027 deadline hits, it says.

The three chapters in the code of practice are transparency, copyright, and safety and security. The copyright requirements are likely where American and European companies are highly split, said Yelena Ambartsumian, founder of tech consultancy firm Ambart Law.

In order to train LLMs, you need a broad, high-quality dataset with good grammar, Ambartsumian said. Many American LLMs turn to pirated collections of books.

“So [American companies] made a bet that, instead of paying for this content, licensing it, which would cost billions of dollars, the bet was okay, ‘we’re going to develop these LLMs, and then we’ll deal with the fallout, the lawsuits later,’” Ambartsumian said. “But at that point, we’ll be in a position where, because of our war chest, or because of our revenue, we’ll be able to deal with the fallout of this fair use litigation.”

And those bets largely worked out. In two recent lawsuits, Bartz v. Anthropic and Kadrey v. Meta, judges ruled in favor of the AI developers based on the “fair use” doctrine, which allows people to use copyrighted material without permission in certain journalistic or creative contexts. In AI developer Anthropic’s case, Judge William Alsup likened the training process to how a human might read, process, and later draw on a book’s themes to create new content.

But the EU’s copyright policy bans developers from training AI on pirated content and says companies must also comply with content owners’ requests to not use their works in their datasets. It also outlines rules about transparency with web crawlers, or how AI models rake through the internet for information. AI companies will also have to routinely update documentation about their AI tools and services for privacy and security.

Those subject to the requirements of the EU’s AI Act are the developers of general purpose AI models, nearly all of which are large American corporations, Ambartsumian said. Even if a smaller AI model comes along, it’s often quickly purchased by one of the tech giants, or they develop their own versions of the tool.

“I would also say that in the last year and a half, we’ve seen a big shift where no one right now is trying to develop a large language model that isn’t one of these large companies,” Ambartsumian said.

Regulations could bring markets together

There’s a “chasm” between the huge American tech companies and European startups, said Jeff Le, founder and managing partner of tech policy consultancy 100 Mile Strategies LLC. There’s a sense that Europe is trying to catch up with the Americans who have had unencumbered freedom to grow their models for years.

But Le said he thinks it’s interesting that Meta has categorized the code of practice as overreach.

“I think it’s an interesting comment at a time where Europeans understandably have privacy and data stewardship questions,” Le said. “And that’s not just in Europe. It’s in the United States too, where I think Gallup polls and other polls have revealed bipartisan support for consumer protection.”

As the code of practice says, signing now will reduce companies’ administrative burden when the AI Act goes into full enforcement in August 2027. Le said that relationships between companies that sign could garner them more understanding and familiarity when the regulatory burdens are in place.

But some may feel the transparency or copyright requirements could cost them a competitive edge, he said.

“I can see why Meta, which would be an open model, they’re really worried about (the copyright) because this is a big part of their strategy and catching up with OpenAI and (Anthropic),” Le said. “So there’s that natural tension that will come from that, and I think that’s something worth noting.”

Le said that the large AI companies are likely trying to anchor themselves toward a framework that they think they can work with, and maybe even influence. Right now, the U.S. is a patchwork of AI legislation. Some of the protections outlined in the EU AI Act are mirrored in state laws, but there’s no universal code for global companies.

The EU’s code of practice could end up being that standard-setter, Peaden said.

“Even though it’s not mandatory, guess what? People will start following,” she said. “Frankly, I would say the future of building the best model lies in a few other players. And I do think that … if four out of five of the primary AI providers are following the general purpose AI code of practice, the others will follow.”

Editor’s note: This item has been modified to revise comments from Jeff Le.

Kentucky Lantern is part of States Newsroom, a nonprofit news network supported by grants and a coalition of donors as a 501c(3) public charity. Kentucky Lantern maintains editorial independence. Contact Editor Jamie Lucke for questions: info@kentuckylantern.com.

The post EU’s new AI code of practice could set regulatory standard for American companies appeared first on kentuckylantern.com

Note: The following A.I. based commentary is not part of the original article, reproduced above, but is offered in the hopes that it will promote greater media literacy and critical thinking, by making any potential bias more visible to the reader –Staff Editor.

Political Bias Rating: Centrist

The content presents a balanced overview of the differing approaches to AI regulation between the European Union and the United States, highlighting perspectives from various stakeholders including tech companies, legal experts, and policymakers. It neither strongly advocates for heavy regulation nor champions unregulated innovation, instead providing context on the complexities and trade-offs involved. The article maintains a neutral tone, focusing on factual reporting and multiple viewpoints without evident partisan framing.

-

Mississippi Today6 days ago

After 30 years in prison, Mississippi woman dies from cancer she says was preventable

-

News from the South - Texas News Feed6 days ago

Texas redistricting: What to know about Dems’ quorum break

-

News from the South - North Carolina News Feed4 days ago

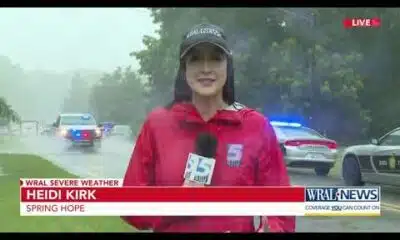

Two people unaccounted for in Spring Lake after flash flooding

-

News from the South - Florida News Feed6 days ago

Warning for social media shoppers after $22K RV scam

-

News from the South - Georgia News Feed6 days ago

Georgia lawmakers to return this winter to Capitol chambers refreshed with 19th Century details

-

Mississippi Today5 days ago

Brain drain: Mother understands her daughters’ decisions to leave Mississippi

-

News from the South - Louisiana News Feed6 days ago

Plans for Northside library up for first vote – The Current

-

News from the South - Arkansas News Feed6 days ago

Op-Ed: U.S. District Court rightfully blocked Arkansas’ PBM ban | Opinion